Design and Create Tests for Data Pipelines – Monitoring Azure Data Storage and Processing

The process for designing and creating tests for Azure Stream Analytics is, from an Azure DevOps perspective, the same as it is for batch processing: you create a test plan made up of test cases, each containing the steps required to complete it. The brainjammer Azure Stream Analytics job you created in Exercise 3.17 consists of three components: an input, a query, and an output. Breaking each component down into what it is expected to do provides the detail for each test case. The following is a breakdown of the expected outcomes for each component:

- Input: brainwaves
  - Capture the number of brain wave readings received by the Azure event hub.
  - Capture the number of brain wave readings received by the Azure Stream Analytics job.
  - Are both numbers the same?
- Query
  - Are there any errors, backlogs, or watermark delays?
- Output: powerBI
  - Are the results of the query being streamed to Power BI?
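The breakdown above maps naturally onto a test plan whose test cases are simple pass/fail checks. The following Python sketch assumes you have already collected metric totals for the same time window from Azure Monitor (for example, from the Metrics blade of each resource) into a dictionary. The metric names used as keys (IncomingMessages for the event hub; InputEvents, Errors, InputEventsSourcesBacklogged, OutputWatermarkDelaySeconds, and OutputEvents for the job), the JobTestCase class, run_test_plan, and the 10-second watermark threshold are assumptions for illustration, not part of the brainjammer exercise; verify the metric names against your own resources.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical threshold: how much watermark delay the test tolerates
MAX_WATERMARK_DELAY_SECONDS = 10


@dataclass
class JobTestCase:
    component: str                 # component under test (input, query, or output)
    description: str               # expected outcome, taken from the breakdown
    check: Callable[[dict], bool]  # returns True when the outcome is met


# Metric names are assumed Azure Monitor names; confirm them for your resources
TEST_PLAN = [
    JobTestCase("Input: brainwaves",
                "Event hub and job received the same number of readings",
                lambda m: m["IncomingMessages"] == m["InputEvents"]),
    JobTestCase("Query",
                "No errors, backlogged input events, or excessive watermark delay",
                lambda m: m["Errors"] == 0
                and m["InputEventsSourcesBacklogged"] == 0
                and m["OutputWatermarkDelaySeconds"] <= MAX_WATERMARK_DELAY_SECONDS),
    JobTestCase("Output: powerBI",
                "Job produced output events for Power BI",
                lambda m: m["OutputEvents"] > 0),
]


def run_test_plan(metrics: dict) -> dict[str, bool]:
    """Evaluate every test case against one metrics snapshot."""
    return {tc.component: tc.check(metrics) for tc in TEST_PLAN}


if __name__ == "__main__":
    # Hypothetical snapshot; in practice these totals come from Azure Monitor
    snapshot = {"IncomingMessages": 1200, "InputEvents": 1200, "Errors": 0,
                "InputEventsSourcesBacklogged": 0,
                "OutputWatermarkDelaySeconds": 2, "OutputEvents": 40}
    for component, passed in run_test_plan(snapshot).items():
        print(component, "PASS" if passed else "FAIL")
```

A nonzero OutputEvents count only shows that the job wrote results to its output; confirming that the data actually appears in the Power BI dataset or dashboard remains a manual step in that test case.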

The Azure Stream Analytics exercises in Chapter 7 were all performed on the live job in the Azure portal. You should not make changes like that to a production job that is generating business insights, because of the disruption they would cause. Instead, create a separate, identical environment in which to test any changes to the input, query, or output. After running a test, use the checks in the preceding list to determine whether the changes were successful. If they were, commit the changes to your source code repository. Once the code is merged and testing is signed off, the CI/CD process that moves the changes into production is triggered.

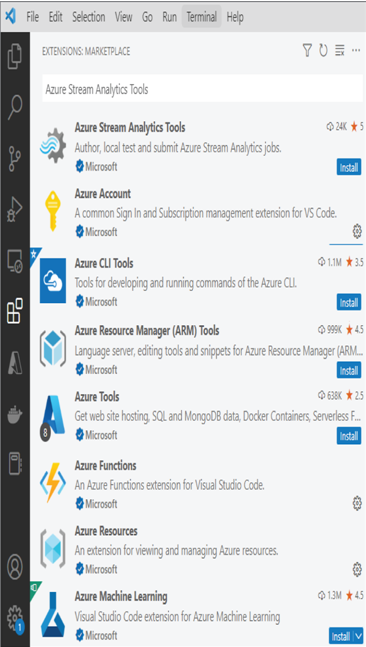

To begin integrating Azure Stream Analytics into a release pipeline, look at the Visual Studio Code blade in the Azure portal for your Azure Stream Analytics job. It provides links to download Visual Studio Code and to manage the job from the IDE, along with instructions for using an npm package to set up the CI/CD pipeline. After your Azure DevOps project is set up, the next step in the release process is to install the Azure Stream Analytics Tools extension in Visual Studio Code. Figure 9.30 shows how this looks in version 1.73.1 of the IDE.
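Microsoft's npm package for Stream Analytics CI/CD is azure-streamanalytics-cicd, installed with `npm install -g azure-streamanalytics-cicd`; its build command compiles a local Stream Analytics project into templates that a release pipeline can deploy. The Python sketch below only assembles and runs that command from a pipeline step. The helper names, the project file name asaproj.json, and the Deploy output folder are hypothetical, and the -project and -outputPath flags should be confirmed against the package documentation for the version you install, since its options have changed over time.

```python
import shlex
import shutil
import subprocess


def asa_build_command(project_file: str, output_dir: str) -> list[str]:
    """Argument list for compiling a Stream Analytics project (flags assumed, verify per version)."""
    return [
        "azure-streamanalytics-cicd", "build",
        "-project", project_file,   # path to the local Stream Analytics project file
        "-outputPath", output_dir,  # folder that receives the generated templates
    ]


def run_build(project_file: str, output_dir: str) -> None:
    cmd = asa_build_command(project_file, output_dir)
    # Resolve the executable first; on Windows agents npm installs a .cmd shim
    exe = shutil.which(cmd[0])
    if exe is None:
        raise FileNotFoundError("azure-streamanalytics-cicd is not on PATH; install it with npm first")
    # check=True fails the pipeline step if the build returns a nonzero exit code
    subprocess.run([exe, *cmd[1:]], check=True)


if __name__ == "__main__":
    # Print the command only; call run_build(...) on the pipeline agent to execute it
    print(shlex.join(asa_build_command("./asaproj.json", "./Deploy")))
```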

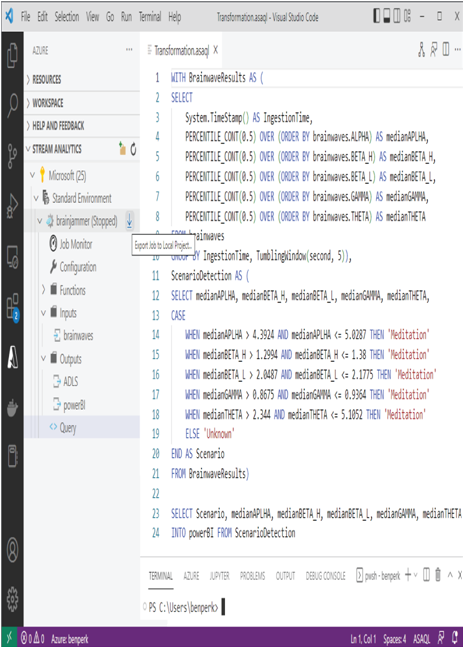

Once the extension is installed, you can export the job query to your local machine, as shown in Figure 9.31.

With the query stored locally, you can modify it and test your changes against it. When you are satisfied with the changes, commit them to the Azure DevOps repo, which triggers the CI/CD process. Provisioning, creating, and configuring an end-to-end CI/CD process is outside the scope of this book; the complexity of such a procedure warrants a book of its own. The important points are that you should not modify the query of a live job, and that you integrate Azure Stream Analytics jobs into a CI/CD pipeline by using Visual Studio Code and the Azure Stream Analytics Tools extension.
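Testing the exported query locally ultimately comes down to comparing the output of a local run with the output you expect. The extension can run a query locally against sample input; the Python sketch below covers only the comparison step, assuming both outputs have been saved as lists of JSON records. compare_outputs and load_records are hypothetical helpers, and the JSON-array file layout is an assumption. Records are treated as a multiset, so neither record order nor key order affects the result, while duplicate records are still counted.

```python
import json
from collections import Counter


def _canonical(record: dict) -> str:
    # Sorted keys make {"a": 1, "b": 2} and {"b": 2, "a": 1} compare as equal
    return json.dumps(record, sort_keys=True)


def compare_outputs(actual: list[dict], expected: list[dict]) -> tuple[list[dict], list[dict]]:
    """Return (missing, unexpected); both lists are empty when the outputs match."""
    actual_counts = Counter(_canonical(r) for r in actual)
    expected_counts = Counter(_canonical(r) for r in expected)
    # Counter subtraction keeps only positive counts, so duplicates are accounted for
    missing = [json.loads(s) for s in (expected_counts - actual_counts).elements()]
    unexpected = [json.loads(s) for s in (actual_counts - expected_counts).elements()]
    return missing, unexpected


def load_records(path: str) -> list[dict]:
    # Hypothetical file layout: a single JSON array of output records
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

Exact matching suits counts and keys; floating-point aggregates, such as averages over brain wave readings, may need a tolerance instead.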

FIGURE 9.30 Azure Stream Analytics Tools extension in the Visual Studio Code blade

FIGURE 9.31 An Azure Stream Analytics job query in the Visual Studio Code blade